
Running Small Language Models using NPU with Copilot+ PCs

Leverage the power of the NPU to run Small Language Models (SLMs) on Snapdragon X Elite devices using Foundry Local, AI Toolkit and AI Dev Toolkit.

In my work I focus on Microsoft 365 Copilot, but over a year ago I personally invested in a Snapdragon device to run local models, and for a long time I struggled to get SLMs to run on the NPU. Why the NPU? Why not? Yes, you can run a very wide range of models on the CPU, and on the GPU too if you have a decent one; memory determines how large a model you can run. But Copilot+ PCs were promoted on their ability to run AI models, and only recently (after May 2025) did Windows 11 and the Lenovo drivers align well enough for me to start running them.

This is an updated post on running SLMs on a Snapdragon X Elite NPU, based on my original post from 2024: Running models using NPU with Copilot+ PC | pkbullock.com

My main purpose in trying out these models on a local device is to explore offline capabilities, keep costs controllable (they do not consume cloud services), and integrate them with PowerShell scripts that use the local device’s capabilities without being completely dependent on cloud services.

I have also been experimenting with third-party options such as Ollama, Nexa AI, AnythingLLM, LM Studio and more, some of which can run models on the NPU. For the sake of brevity, I will focus on the Microsoft tooling for now, but I encourage you to explore these tools: they are powerful and may have capabilities better suited to your needs.

Machine specifications

My machine is a Copilot+ PC, which means its NPU delivers 40+ TOPS of compute, the minimum Microsoft requires for this class of device. It wasn’t intended to be a high-end device; I just needed enough compute to run AI models, not to train them or run very large variants such as the gpt-oss-120b model.

- Lenovo Slim 7x, Snapdragon X Elite processor (X1E78100), which includes an NPU

- Windows 11 Build: 26200.6899

- Driver version: 30.0.140.1000

Experience has taught me to be careful with running preview versions of Windows (Dev/Beta/Prerelease), as the NPU drivers may not align. There have been many times I’ve had to reset my PC back to factory settings, on nearly every version and for various reasons, not just NPU-related ones. OneDrive, GitHub and a gist of WinGet commands are your friends for keeping work in the cloud and restoring devices quickly.
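A gist of WinGet commands can be as simple as the sketch below. The package IDs are examples I am assuming for illustration (confirm each with `winget search` before relying on it), and `Get-WingetInstallArgs` and `Install-ToolList` are hypothetical helper names; the flags used (`--id`, `--exact`, `--accept-package-agreements`, `--accept-source-agreements`) are standard `winget install` options.

```powershell
# Example package IDs (assumed; confirm with `winget search <name>` first)
$Packages = @(
    'Microsoft.VisualStudioCode'
    'Microsoft.FoundryLocal'
    'Git.Git'
    'Microsoft.PowerShell'
)

# Hypothetical helper: builds the argument list for one `winget install` call
function Get-WingetInstallArgs {
    param([Parameter(Mandatory)][string]$Id)
    return @('install', '--id', $Id, '--exact',
             '--accept-package-agreements', '--accept-source-agreements')
}

# Hypothetical helper: installs each package in turn and warns on failures
function Install-ToolList {
    param([Parameter(Mandatory)][string[]]$Ids)
    foreach ($id in $Ids) {
        $wingetArgs = Get-WingetInstallArgs -Id $id
        Write-Host "Installing $id..."
        # Splatting an array passes each element as a separate argument
        & winget @wingetArgs
        if ($LASTEXITCODE -ne 0) {
            Write-Warning "winget returned exit code $LASTEXITCODE for $id"
        }
    }
}
```

On a freshly reset machine you would then run `Install-ToolList -Ids $Packages`. Note that WinGet can return a non-zero exit code when a package is already installed, which is why the sketch warns rather than stops.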

Note: I still feel many of these capabilities are in preview and not that stable; for example, they quite often stop working after one Windows update and are restored by the next. I do wonder whether I would have fewer issues with a Microsoft Surface device with a Snapdragon processor.

What tools can you run?

There are now a number of tools that support running models on the NPU to make use of the hardware. This list is not exhaustive; it covers the tools I have used so far.

Microsoft Foundry Local

Foundry Local is a tool that allows you to run models locally on your device using the CPU, GPU or NPU. As you can probably guess, it runs locally without AI Foundry dependencies, and it can serve models for applications to consume through an API. For details, check out this reference: Get started with Foundry Local | Microsoft Learn

Today you can run models locally on the NPU, and the list has recently grown to include more of the Phi-3 model series, such as:

- phi-3.5-mini-128k-instruct-qnn-npu

- phi-3-mini-128k-instruct-qnn-npu

- phi-3-mini-4k-instruct-qnn-npu

Other NPU supported models include:

- deepseek-r1-distill-qwen-14b-qnn-npu

- deepseek-r1-distill-qwen-7b-qnn-npu

- Phi-4-mini-reasoning-qnn-npu

- qwen2.5-1.5b-instruct-qnn-npu

- qwen2.5-7b-instruct-qnn-npu
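To check which NPU variants are available on your own machine, you can filter the CLI’s model listing. This sketch assumes `foundry model list` prints model IDs ending in `-qnn-npu` (optionally with a `:<version>` suffix), like the names above; the output layout can change between Foundry Local versions, so treat the regular expression and the hypothetical `Select-NpuModelId` helper as assumptions.

```powershell
# Hypothetical helper: extracts NPU (QNN) model IDs from lines of text, such as
# the output of `foundry model list`. Assumes IDs end in "-qnn-npu", optionally
# followed by a ":<version>" suffix.
function Select-NpuModelId {
    param(
        [Parameter(Mandatory, ValueFromPipeline)]
        [AllowEmptyString()]   # CLI output contains blank lines
        [string]$Line
    )
    begin   { $found = [System.Collections.Generic.List[string]]::new() }
    process {
        foreach ($m in [regex]::Matches($Line, '[\w.\-]+-qnn-npu(?::\d+)?')) {
            $found.Add($m.Value)
        }
    }
    # Emit each distinct ID once, sorted
    end     { $found | Sort-Object -Unique }
}
```

Usage, with Foundry Local installed: `foundry model list | Select-NpuModelId`.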

I am focusing on the phi-3.5-mini-128k-instruct-qnn-npu model and appear to be getting reasonable results from small “chats” with the SLM. As an example, I used the prompt “Write a PowerShell script that will list out the files within a specified folder.” and the model produced the following result, along with a variation that included error handling:

```powershell
foundry model run phi-3.5-mini-128k-instruct-qnn-npu
```

The model’s response:

```powershell
# Define the path to the folder you want to inspect
$FolderPath = "C:\Path\To\Your\Folder" # Replace this with your folder path

# Check if the folder exists
if (Test-Path $FolderPath) {
    # List all files in the folder
    Get-ChildItem -Path $FolderPath -Recurse
} else {
    Write-Host "Folder does not exist."
}
```

This is a simple example, run as a test in interactive mode, but Foundry Local also exposes an API that applications can use to send prompt requests, so you can test them within your own applications.

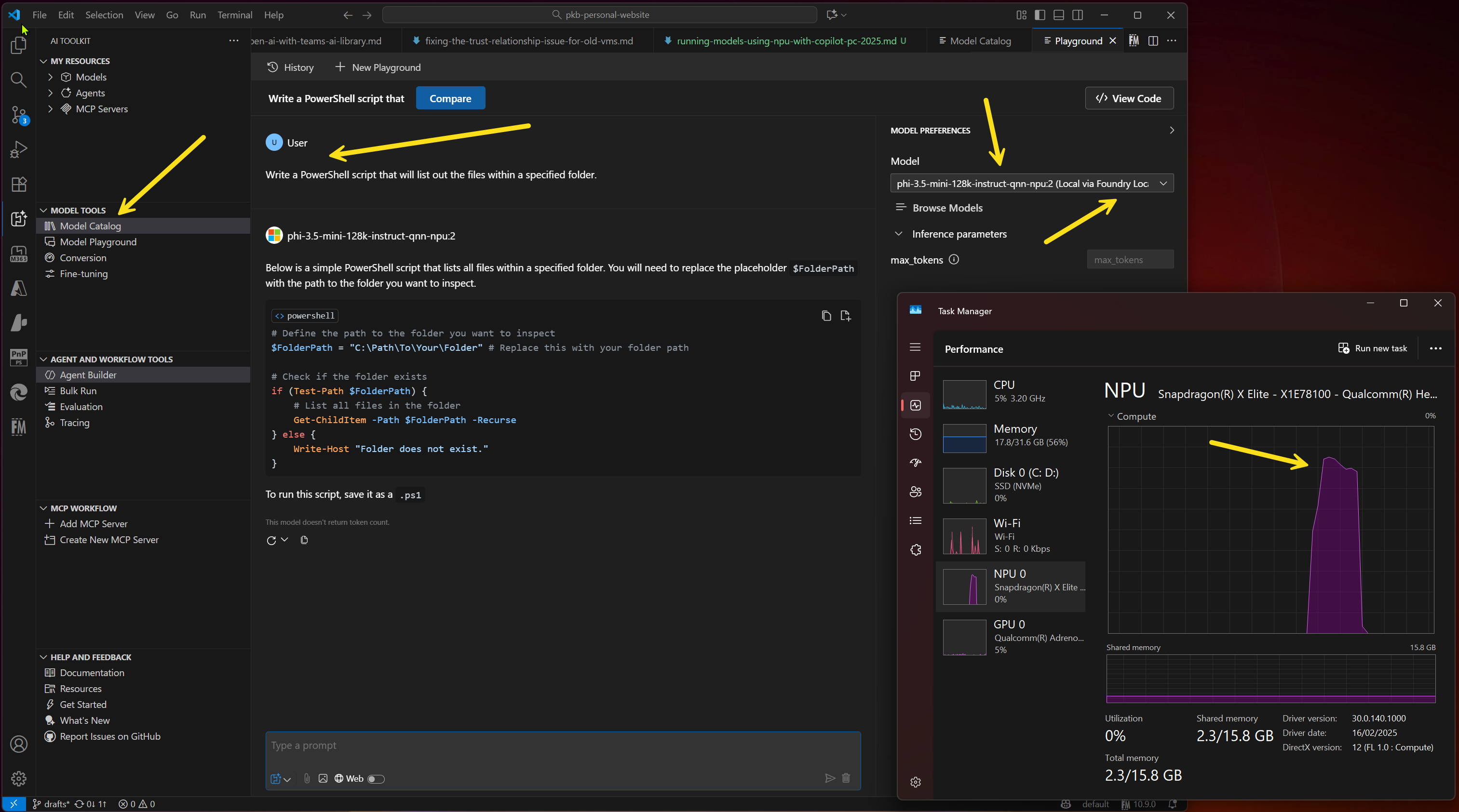
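As a rough sketch of calling that API from PowerShell: Foundry Local serves an OpenAI-compatible REST endpoint, so a chat request is a POST to `/v1/chat/completions`. The port is assigned by the service, so the base URI is an assumption you should replace with the address reported by `foundry service status`, and the REST API may expect the full model ID shown by `foundry model list` rather than an alias. `New-FoundryChatBody` and `Invoke-FoundryChat` are hypothetical helper names.

```powershell
# Hypothetical helper: builds an OpenAI-style chat completions request body.
function New-FoundryChatBody {
    param(
        [Parameter(Mandatory)][string]$Model,
        [Parameter(Mandatory)][string]$Prompt
    )
    $body = [ordered]@{
        model    = $Model
        messages = @(
            [ordered]@{ role = 'user'; content = $Prompt }
        )
    }
    # -Depth ensures the nested messages array is fully serialised
    return ($body | ConvertTo-Json -Depth 5)
}

# Hypothetical helper: sends the prompt to a running Foundry Local service and
# returns the text of the first choice. $BaseUri is an assumption; use the
# endpoint shown by `foundry service status` on your machine.
function Invoke-FoundryChat {
    param(
        [Parameter(Mandatory)][string]$BaseUri,
        [Parameter(Mandatory)][string]$Model,
        [Parameter(Mandatory)][string]$Prompt
    )
    $request = @{
        Method      = 'Post'
        Uri         = $BaseUri.TrimEnd('/') + '/v1/chat/completions'
        ContentType = 'application/json'
        Body        = New-FoundryChatBody -Model $Model -Prompt $Prompt
    }
    $response = Invoke-RestMethod @request
    return $response.choices[0].message.content
}
```

For example, `Invoke-FoundryChat -BaseUri 'http://localhost:<port>' -Model 'phi-3.5-mini-128k-instruct-qnn-npu' -Prompt 'Write a PowerShell script that will list out the files within a specified folder.'` should return the same kind of script as the interactive session, once `<port>` is replaced with your service’s port. Keeping the body builder separate makes it easy to inspect the JSON without a running service.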

Visual Studio Code Extension: AI Toolkit

AI Toolkit is an extension for Visual Studio Code that provides a range of capabilities and options for running models from a variety of sources, including integration with Foundry Local and running models in the cloud if required. For this example, I am running the same phi-3.5-mini-128k-instruct-qnn-npu model in the playground with the same prompt, “Write a PowerShell script that will list out the files within a specified folder.”:

```powershell
# Define the path to the folder you want to inspect
$FolderPath = "C:\Path\To\Your\Folder" # Replace this with your folder path

# Check if the folder exists
if (Test-Path $FolderPath) {
    # List all files in the folder
    Get-ChildItem -Path $FolderPath -Recurse
} else {
    Write-Host "Folder does not exist."
}
```

What is nice is the growing integration with Foundry Local for running the models, with the added advantage of chat and agent playgrounds that can run locally.

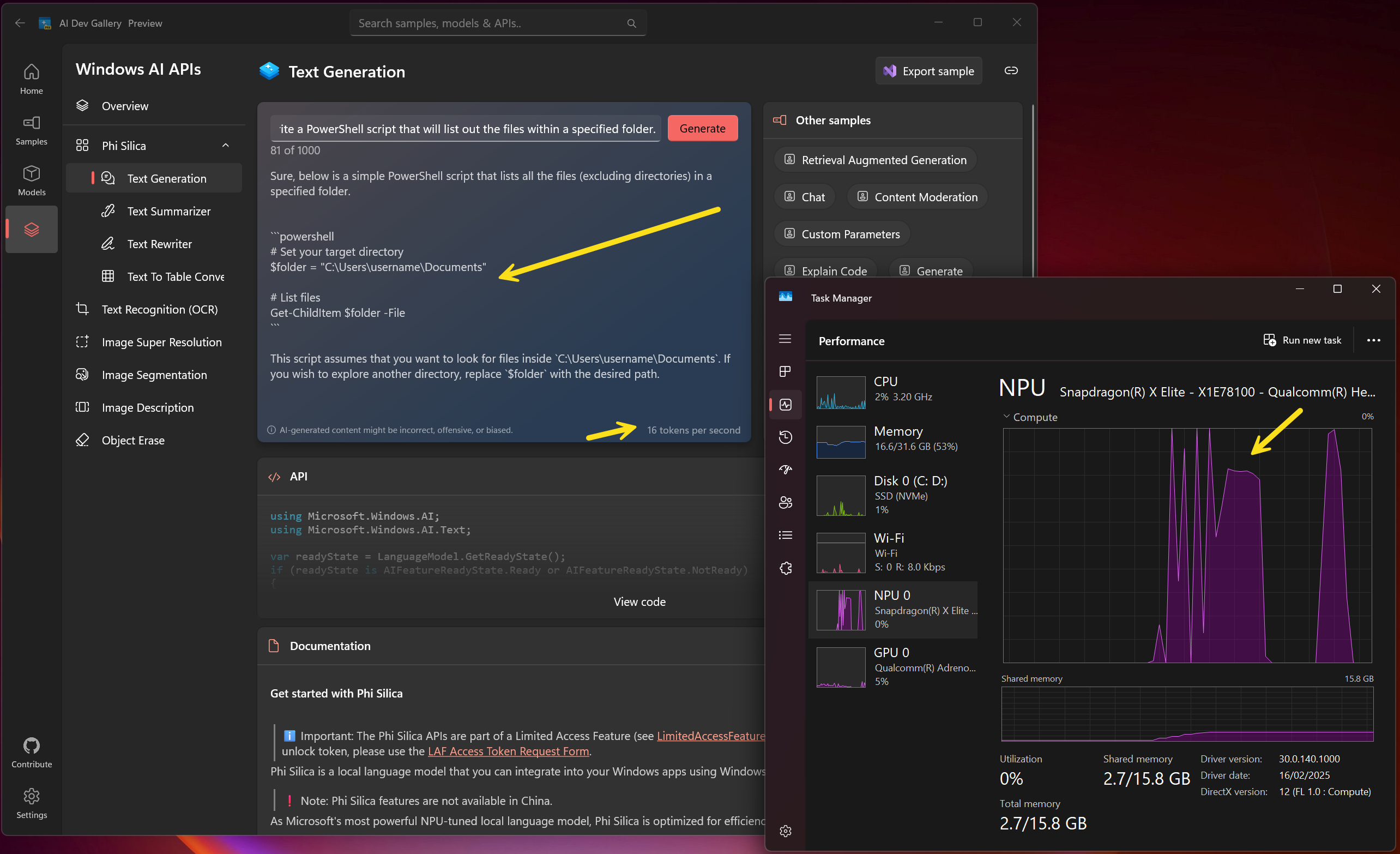

AI Dev Toolkit - Windows AI Foundry

This is a Windows Store app that takes advantage of the Windows AI APIs and AI features in Copilot+ PCs to abstract away the complexity required to run models.

Phi Silica handles text generation, summarisation, rewriting and table conversion; it is a special NPU-tuned model dedicated to running on Copilot+ PCs.

Further model support for:

- Text Recognition (OCR) to read text from an image

- Image Super Resolution to scale lower-resolution images up to higher resolutions

- Image Segmentation

- Image Description

- Object Erase

I look forward to the local semantic search capabilities, currently in private preview. They appear to offer RAG capabilities over local knowledge: in essence, a local semantic index on which an LLM can be grounded to provide responses based on local data. More (albeit limited) information on this capability: Semantic Search and Knowledge Retrieval (PRIVATE PREVIEW) | Microsoft Learn
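The Windows semantic search API itself is in private preview, so I won’t guess at its shape. As a generic illustration of the grounding idea only (not that API), a retrieval step ranks local snippets by the cosine similarity between their embedding vectors and the query’s vector, then places the best matches in the prompt. The tiny hand-made vectors and the `Get-CosineSimilarity` and `Get-GroundedPrompt` helpers are hypothetical; a real index would get its vectors from an embedding model.

```powershell
# Cosine similarity between two equal-length numeric vectors.
function Get-CosineSimilarity {
    param([double[]]$A, [double[]]$B)
    if ($A.Length -ne $B.Length) { throw 'Vectors must have the same length.' }
    $dot = 0.0; $normA = 0.0; $normB = 0.0
    for ($i = 0; $i -lt $A.Length; $i++) {
        $dot   += $A[$i] * $B[$i]
        $normA += $A[$i] * $A[$i]
        $normB += $B[$i] * $B[$i]
    }
    if ($normA -eq 0 -or $normB -eq 0) { return 0.0 }
    return $dot / ([math]::Sqrt($normA) * [math]::Sqrt($normB))
}

# Hypothetical helper: picks the $Top most similar snippets and builds a grounded prompt.
function Get-GroundedPrompt {
    param(
        [string]$Question,
        [double[]]$QueryVector,
        [object[]]$Index,   # items with .Text and .Vector properties
        [int]$Top = 1
    )
    $best = $Index |
        Sort-Object -Property @{ Expression = { Get-CosineSimilarity -A $QueryVector -B $_.Vector }; Descending = $true } |
        Select-Object -First $Top
    $context = ($best | ForEach-Object { "- $($_.Text)" }) -join "`n"
    return "Answer using only this context:`n$context`n`nQuestion: $Question"
}

# Toy index with made-up 3-dimensional vectors (illustration only)
$Index = @(
    [pscustomobject]@{ Text = 'Invoices are stored in D:\Finance'; Vector = [double[]](0.9, 0.1, 0.0) }
    [pscustomobject]@{ Text = 'The NPU driver version is 30.0.140.1000'; Vector = [double[]](0.0, 0.2, 0.9) }
)
```

The resulting prompt string is what you would send to a local model, so its answer is constrained to the retrieved local data.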

So let’s repeat the same test with Phi Silica (this isn’t the same model, and it is the only one available to use) and generate the PowerShell script using the prompt “Write a PowerShell script that will list out the files within a specified folder.” The interface is a sample application that the AI Dev Toolkit provides, and this is the result:

```powershell
# Set your target directory
$folder = "C:\Users\username\Documents"

# List files
Get-ChildItem $folder -File
```

This isn’t an entirely fair comparison, but it demonstrates that, in this case, inference for this type of question can run locally, and the tool reports around 16 tokens per second for the result.
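To put around 16 tokens per second in context: throughput is generated tokens divided by elapsed seconds, so a 200-token answer at that rate takes about 200 / 16 = 12.5 seconds. If you want a comparable figure for a model served by Foundry Local’s OpenAI-compatible endpoint, a sketch like the following could time a request, assuming the response includes an OpenAI-style `usage.completion_tokens` field; `Measure-TokenRate` is a hypothetical helper name.

```powershell
# Tokens per second = generated (completion) tokens / elapsed wall-clock seconds.
function Get-TokensPerSecond {
    param(
        [Parameter(Mandatory)][int]$CompletionTokens,
        [Parameter(Mandatory)][TimeSpan]$Elapsed
    )
    if ($Elapsed.TotalSeconds -le 0) { throw 'Elapsed time must be positive.' }
    return $CompletionTokens / $Elapsed.TotalSeconds
}

# Hypothetical helper: times one request to a local OpenAI-compatible endpoint.
# Assumes the JSON response carries usage.completion_tokens.
function Measure-TokenRate {
    param(
        [Parameter(Mandatory)][string]$Uri,   # e.g. http://localhost:<port>/v1/chat/completions
        [Parameter(Mandatory)][string]$Body   # JSON chat completions request body
    )
    $sw = [System.Diagnostics.Stopwatch]::StartNew()
    $response = Invoke-RestMethod -Method Post -Uri $Uri -ContentType 'application/json' -Body $Body
    $sw.Stop()
    $tokens = [int]$response.usage.completion_tokens
    return [pscustomobject]@{
        CompletionTokens = $tokens
        Seconds          = [math]::Round($sw.Elapsed.TotalSeconds, 2)
        TokensPerSecond  = [math]::Round((Get-TokensPerSecond -CompletionTokens $tokens -Elapsed $sw.Elapsed), 1)
    }
}
```

Because the stopwatch also covers prompt processing and HTTP overhead, this measures end-to-end throughput, which will read slightly lower than a pure generation rate.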

The use case for this type of capability isn’t the same, and this tooling offers a wider range of capabilities as part of the API, such as generation, summarisation, image manipulation and OCR features.

Conclusion

Generally, the good news is that we can now run local models. Whilst they are generally smaller (of course, they have to be), they are reasonably capable at many tasks. When creating applications, it may be wise to set expectations that certain capabilities will be limited in local or offline modes. These are still PREVIEW capabilities in much of the tooling, but it feels like we are now heading much closer to GA.

I firmly believe that, with such a range of models, local and cloud, from vendor to vendor, it is a matter of using the right model for the right job. Whilst there are some general models, they cannot do everything to the depth needed, and some use cases require a more specific model that has been designed and trained to perform that task.

Useful References

I have been building a set of references for learning about running applications on the NPU; here are some useful ones:

- Foundry Local architecture | Microsoft Learn

- Foundry Local REST API Reference | Microsoft Learn

- What are Windows AI APIs? | Microsoft Learn

- Run ONNX models using the ONNX Runtime included in Windows ML | Microsoft Learn

- Windows ML APIs | Microsoft Learn

- Leveraging the power of NPU to run Gen AI tasks on Copilot+ PCs | Surface Blog

- Windows on Snapdragon | Qualcomm

- QNN Execution Provider | ONNX Runtime

- Develop AI applications for Copilot+ PCs | Microsoft Learn

Enjoy!